The Complete Guide to 3D Rendering Methods: Techniques, Technologies, and Best Practices

Three-dimensional rendering stands as the cornerstone of modern visual communication, transforming abstract digital models into compelling images that captivate audiences across industries. From architectural visualization to product design, entertainment to medical imaging, the choice of rendering method fundamentally determines the quality, speed, and cost-effectiveness of your visual output.

This comprehensive guide explores the essential rendering techniques powering today’s digital imagery, examining both time-tested methods and cutting-edge innovations reshaping the industry in 2025. Whether you’re a studio evaluating technologies, a business seeking visualization services, or a professional expanding your technical knowledge, understanding these rendering approaches empowers better decision-making and superior results.

Understanding 3D Rendering: Foundation Concepts

Before examining specific techniques, it’s essential to grasp what rendering actually accomplishes. Three-dimensional rendering converts geometric models—collections of vertices, edges, and polygons existing in mathematical space—into two-dimensional images viewable on screens or in print. This transformation requires sophisticated calculations determining how light interacts with surfaces, how materials appear under various conditions, and which elements remain visible from particular viewpoints.

The rendering process sits at the intersection of mathematics, physics, art, and computer science. Algorithms must solve complex equations governing light behavior while maintaining computational efficiency, all while giving artists sufficient creative control to achieve their vision. Different rendering methods prioritize these considerations differently, creating a diverse ecosystem of techniques suited to varied applications.

Two fundamental categories organize rendering approaches: real-time rendering and offline rendering. Real-time methods prioritize speed, generating images fast enough for interactive applications like video games, virtual reality experiences, and design software where users expect immediate visual feedback. Offline rendering prioritizes quality over speed, taking minutes or hours per frame to achieve photorealistic results for film, advertising, and high-end visualization projects.

Understanding this speed-versus-quality tradeoff guides technique selection. A video game requiring sixty frames per second demands entirely different rendering approaches than an architectural marketing image where a single perfect shot justifies hours of computation.
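The tradeoff becomes concrete once a frame rate is converted into a per-frame time budget. The short Python sketch below does that arithmetic; the function name is illustrative. At sixty frames per second, everything must finish in roughly 16.7 milliseconds, whereas an offline frame can take hours.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at a target frame rate."""
    return 1000.0 / fps

for fps in (30, 60, 90, 120):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):6.2f} ms per frame")

# A hypothetical offline frame that renders in 2 hours, compared with the 60 fps budget.
offline_ms = 2 * 60 * 60 * 1000
print(f"2-hour offline frame = {offline_ms / frame_budget_ms(60):,.0f} x the 60 fps budget")
```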

Rasterization: The Foundation of Real-Time Rendering

Rasterization represents the most prevalent rendering method in interactive applications, powering everything from video games to CAD software, mobile apps to web-based 3D viewers. This technique’s dominance stems from its computational efficiency and hardware optimization, making it the natural choice when speed matters most.

How Rasterization Works

Rasterization converts three-dimensional geometry into pixels through a projection process. The technique takes each polygon in your 3D scene, projects it onto the two-dimensional screen plane, then determines which pixels fall inside that projected shape. For each covered pixel, the renderer calculates color based on material properties, lighting, and texture information.

The process follows a structured pipeline optimized for modern graphics processing units. First, vertex shaders transform each vertex from its model or world coordinates into clip space, after which fixed-function hardware performs the perspective divide and viewport transform to produce screen coordinates. Next, the rasterizer identifies which pixels each triangle covers. Finally, fragment shaders calculate the color of each covered pixel, incorporating textures, lighting calculations, and various visual effects, while a depth buffer keeps only the surface nearest the camera at each pixel.
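The three stages can be illustrated with a deliberately tiny CPU sketch in Python. It assumes a camera at the origin looking down the negative z axis, a square field of view, pixel-centre sampling, and a single flat colour standing in for the fragment shader; real GPUs run these stages in parallel hardware and add clipping, culling, and far richer shading. All function names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def project(v: Vec3, width: int, height: int, focal: float = 1.0) -> Vec3:
    """Vertex stage: perspective-project a camera-space point (camera looks down -z)
    and map it to pixel coordinates. Returns (x_pixel, y_pixel, view_depth)."""
    x, y, z = v
    ndc_x, ndc_y = focal * x / -z, focal * y / -z    # perspective divide
    px = (ndc_x + 1.0) * 0.5 * width                  # viewport transform
    py = (1.0 - ndc_y) * 0.5 * height                 # row 0 is the top of the image
    return px, py, -z

def edge(ax: float, ay: float, bx: float, by: float, px: float, py: float) -> float:
    """Signed-area edge function: tells which side of edge a->b the point p lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def rasterize(tri: List[Vec3], color: str, frame: List[List[str]], depth: List[List[float]]) -> None:
    height, width = len(frame), len(frame[0])
    (x0, y0, z0), (x1, y1, z1), (x2, y2, z2) = (project(v, width, height) for v in tri)
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return                                        # degenerate triangle
    # Rasterizer: only test pixels inside the triangle's screen-space bounding box.
    for py in range(max(int(min(y0, y1, y2)), 0), min(int(max(y0, y1, y2)) + 1, height)):
        for px in range(max(int(min(x0, x1, x2)), 0), min(int(max(x0, x1, x2)) + 1, width)):
            cx, cy = px + 0.5, py + 0.5               # sample at the pixel centre
            w0 = edge(x1, y1, x2, y2, cx, cy) / area  # barycentric weights
            w1 = edge(x2, y2, x0, y0, cx, cy) / area
            w2 = edge(x0, y0, x1, y1, cx, cy) / area
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue                              # centre lies outside the triangle
            z = 1.0 / (w0 / z0 + w1 / z1 + w2 / z2)   # perspective-correct view depth
            if z < depth[py][px]:                     # depth test keeps the nearest surface
                depth[py][px] = z
                frame[py][px] = color                 # fragment stage: a flat colour here

W, H = 16, 8
frame = [["." for _ in range(W)] for _ in range(H)]
depth = [[float("inf")] * W for _ in range(H)]
rasterize([(-1.0, -1.0, -2.0), (1.0, -1.0, -2.0), (0.0, 1.0, -2.0)], "#", frame, depth)
rasterize([(-3.0, -1.0, -6.0), (3.0, -1.0, -6.0), (0.0, 3.0, -6.0)], "o", frame, depth)
print("\n".join("".join(row) for row in frame))
```

The second, more distant triangle is drawn after the first but only fills pixels the nearer triangle left empty, because the depth test rejects its farther fragments.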

This pipeline architecture enables remarkable parallelization. Modern GPUs contain thousands of processing cores that can simultaneously handle different triangles, vertices, and pixels. A single graphics card can rasterize billions of triangles per second, enabling complex scenes to render at interactive frame rates.

Scanline Rendering: The Classic Approach

Within rasterization, scanline rendering deserves special mention as a foundational technique with historical significance and continued relevance. Scanline rendering processes images row by row rather than polygon by polygon, organizing work around horizontal scan lines across the screen.

The method maintains an active edge table tracking which polygon edges intersect the current scanline. As the algorithm progresses down the screen, it updates this table incrementally, adding edges as new polygons appear and removing edges as polygons end. Within each scanline, the renderer fills horizontal spans between edge pairs, creating solid colored regions.
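A minimal Python sketch of the edge-table idea fills a single polygon using the even-odd rule, sampling at pixel centres. The data layout (a list of [end row, current x, x step per row] for each active edge) is an illustrative assumption; historical implementations also resolved visibility between overlapping polygons within each span, which this sketch omits.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

def scanline_fill(poly: List[Point], width: int, height: int) -> List[List[int]]:
    """Fill one polygon row by row with an edge table and an active edge table,
    using the even-odd rule and sampling at pixel centres."""
    image = [[0] * width for _ in range(height)]
    # Edge table: bucket each edge by the first row whose centre (y + 0.5) it crosses.
    edge_table: Dict[int, List[List[float]]] = {}
    for i in range(len(poly)):
        (x0, y0), (x1, y1) = poly[i], poly[(i + 1) % len(poly)]
        if y0 == y1:
            continue                            # horizontal edges never cross a row centre
        if y0 > y1:
            x0, y0, x1, y1 = x1, y1, x0, y0     # make the edge run top to bottom
        dx_dy = (x1 - x0) / (y1 - y0)           # x step per row
        y_start = max(math.ceil(y0 - 0.5), 0)
        y_end = math.ceil(y1 - 0.5)             # exclusive
        x_start = x0 + (y_start + 0.5 - y0) * dx_dy
        edge_table.setdefault(y_start, []).append([y_end, x_start, dx_dy])

    active: List[List[float]] = []
    for y in range(height):
        active.extend(edge_table.get(y, []))            # edges that begin on this row
        active = [e for e in active if e[0] > y]        # drop edges that have ended
        xs = sorted(e[1] for e in active)
        for left, right in zip(xs[0::2], xs[1::2]):     # fill spans between edge pairs
            for x in range(max(math.ceil(left - 0.5), 0), min(math.ceil(right - 0.5), width)):
                image[y][x] = 1
        for e in active:
            e[1] += e[2]                                # incremental update, no re-intersection
    return image

square = scanline_fill([(2, 1), (6, 1), (6, 5), (2, 5)], 8, 6)
triangle = scanline_fill([(1, 5), (7, 5), (4, 0)], 8, 6)
print("\n".join("".join("#" if v else "." for v in row) for row in triangle))
```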

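Scanline rendering's primary advantages are coherence and memory efficiency. Because edges are sorted by vertical position and updated incrementally, each vertex is read only once, only the edges crossing the current scanline need to be held in working memory, and visibility can be resolved span by span so that each visible pixel is shaded once. This efficiency proved crucial when computational resources were severely limited. Early Evans & Sutherland image generators employed scanline techniques in hardware to generate images one raster line at a time without requiring costly framebuffer memory.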

While modern GPUs have largely moved to triangle-centric rasterization approaches better suited to parallel processing, scanline principles still influence contemporary rendering architectures, particularly in specialized applications requiring precise control over pixel processing order.

Modern Rasterization Enhancements

Contemporary rasterization extends far beyond simple flat-shaded polygons. Modern pipelines incorporate sophisticated features that dramatically improve visual quality while maintaining real-time performance:

Texture Mapping applies detailed images to 3D surfaces, adding visual complexity without geometric overhead. A simple flat polygon can appear as weathered stone, polished metal, or ornate fabric through appropriate texture application.

Normal Mapping creates the illusion of surface detail by replacing the geometric surface normal with a per-pixel normal read from a texture, so lighting calculations respond as if the surface were uneven. This technique makes flat geometry appear to have intricate bumps, dents, and irregularities, multiplying perceived geometric complexity without additional polygons.

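Shadow Mapping generates real-time shadows in two passes. The scene is first rendered from the light source's perspective into a depth map that records the distance to the nearest surface at each texel. When rendering from the camera, each visible point's distance from the light is compared with the stored depth; if the point is farther away than the stored value, something lies between it and the light, so it is shadowed.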

Screen Space Ambient Occlusion approximates how ambient light is blocked in crevices and corners, adding depth and realism to scenes by darkening areas where surfaces come close together.

Post-Processing Effects apply image-based operations after initial rendering, adding bloom, depth of field, motion blur, color grading, and numerous other cinematic effects that enhance visual appeal.
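To illustrate the normal-mapping idea, the sketch below shades one flat polygon with three different normal-map texels. It assumes the common convention of storing tangent-space normals as 8-bit RGB (value / 255 * 2 - 1 per channel) and uses simple Lambertian lighting; the texel values and function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def decode_normal(texel: Tuple[int, int, int]) -> Vec3:
    """Map an 8-bit RGB normal-map texel from [0, 255] to a unit tangent-space vector."""
    return normalize(tuple(c / 255.0 * 2.0 - 1.0 for c in texel))

def shade(texel: Tuple[int, int, int], tangent: Vec3, bitangent: Vec3,
          normal: Vec3, light_dir: Vec3) -> float:
    """Lambertian brightness using the normal-mapped normal instead of the geometric one."""
    tx, ty, tz = decode_normal(texel)
    # Tangent space -> world space: N' = tx * T + ty * B + tz * N
    world_n = normalize(tuple(tx * t + ty * b + tz * n
                              for t, b, n in zip(tangent, bitangent, normal)))
    to_light = normalize(light_dir)
    return max(sum(a * c for a, c in zip(world_n, to_light)), 0.0)

# One flat polygon facing +z, lit from 45 degrees; only the normal-map texel changes.
T, B, N = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
LIGHT = (1.0, 0.0, 1.0)
flat = shade((128, 128, 255), T, B, N, LIGHT)
toward = shade((218, 128, 218), T, B, N, LIGHT)
away = shade((38, 128, 218), T, B, N, LIGHT)
print(f"flat={flat:.3f}  tilted toward light={toward:.3f}  tilted away={away:.3f}")
```

Only the texel changes between the three calls, yet the brightness ranges from nearly 1 to nearly 0, which is how a texture makes flat geometry read as bumpy.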

These enhancements transform basic rasterization into a powerful tool capable of impressive visual quality at interactive speeds. Modern game engines like Unreal Engine and Unity leverage these techniques to create experiences that would have been considered impossible just a decade ago.
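The two-pass depth comparison behind shadow mapping can be sketched in Python under strong simplifying assumptions: a directional light shining straight down, an orthographic shadow map stored as a dictionary of grid cells, and surface point samples instead of rasterized triangles. The names and the bias value are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]   # (x, y, z) world position
Texel = Tuple[int, int]

def light_depth(p: Point) -> float:
    """Distance along a directional light shining straight down (-z): higher points are nearer."""
    return -p[2]

def texel_of(p: Point, cell: float) -> Texel:
    return int(p[0] // cell), int(p[1] // cell)

def build_shadow_map(surface_points: List[Point], cell: float) -> Dict[Texel, float]:
    """Pass 1: 'render' the scene from the light, keeping the nearest depth per texel."""
    shadow_map: Dict[Texel, float] = {}
    for p in surface_points:
        key = texel_of(p, cell)
        shadow_map[key] = min(shadow_map.get(key, float("inf")), light_depth(p))
    return shadow_map

def in_shadow(p: Point, shadow_map: Dict[Texel, float], cell: float, bias: float = 1e-3) -> bool:
    """Pass 2: a camera-visible point is shadowed if the light saw something nearer.
    The small bias keeps a surface from shadowing itself ('shadow acne')."""
    stored = shadow_map.get(texel_of(p, cell), float("inf"))
    return light_depth(p) > stored + bias

CELL = 1.0
ground = [(x + 0.5, y + 0.5, 0.0) for x in range(10) for y in range(10)]
box_top = [(x + 0.5, y + 0.5, 2.0) for x in range(3, 6) for y in range(3, 6)]
smap = build_shadow_map(ground + box_top, CELL)
for p in [(4.5, 4.5, 0.0), (8.5, 8.5, 0.0), (4.5, 4.5, 2.0)]:
    print(p, "shadowed" if in_shadow(p, smap, CELL) else "lit")
```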

Limitations and Considerations

Despite its strengths, rasterization has inherent limitations. The technique struggles with certain optical phenomena that require tracing light paths through scenes. Accurate reflections, refractions, caustics, and global illumination—where light bounces between surfaces—challenge rasterization’s forward-projection approach.

Various workarounds exist, from reflection probes and environment maps to screen-space reflections, but these approximations lack the physical accuracy of ray-traced approaches. As hardware capabilities expand, the industry increasingly combines rasterization’s speed with selective ray tracing for effects requiring greater accuracy.

Ray Tracing: Pursuing Photorealism Through Physics

Ray tracing represents the gold standard for physically accurate rendering, simulating how light actually behaves in the real world. This approach traces paths of light rays as they travel through scenes, bounce off surfaces, pass through transparent materials, and eventually reach the camera. By faithfully modeling light physics, ray tracing achieves realism that rasterization approximates but cannot fully replicate.

The Ray Tracing Process

Ray tracing works backward from the camera. For each pixel in the output image, the renderer casts one or more rays from the camera position through that pixel location into the scene. The algorithm then follows each ray, determining what it hits first.

When a ray intersects an object, the renderer examines material properties at the intersection point. If the surface is reflective, additional rays are cast to determine what reflects in the surface. If it’s transparent, refraction rays trace light bending as it passes through. If it’s rough, multiple rays sample different directions to capture diffuse reflection. Shadow rays check whether light sources are visible from the intersection point.

This recursive ray casting continues until rays either reach light sources, escape the scene, or exhaust a predetermined recursion depth. The accumulated color information from all these ray interactions determines the final pixel color.
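A compact Whitted-style sketch in Python shows the recursion: a primary ray, a shadow ray toward a single point light, and a mirror reflection ray, cut off at a maximum depth. It assumes spheres only, grayscale brightness, and Lambertian diffuse shading; refraction and rough-surface sampling are left out for brevity, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a: Vec, b: Vec) -> Vec: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a: Vec, s: float) -> Vec: return (a[0] * s, a[1] * s, a[2] * s)
def dot(a: Vec, b: Vec) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def unit(a: Vec) -> Vec: return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Sphere:
    center: Vec
    radius: float
    albedo: float        # diffuse brightness in [0, 1]
    reflectivity: float  # share of the result taken from the mirror direction

def intersect(s: Sphere, origin: Vec, d: Vec) -> Optional[float]:
    """Nearest t > 0 where origin + t * d meets the sphere (d must be unit length)."""
    oc = sub(origin, s.center)
    b = dot(oc, d)
    disc = b * b - (dot(oc, oc) - s.radius ** 2)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:                      # ignore hits at the ray's own starting point
            return t
    return None

def closest(scene: List[Sphere], origin: Vec, d: Vec) -> Optional[Tuple[float, Sphere]]:
    best = None
    for s in scene:
        t = intersect(s, origin, d)
        if t is not None and (best is None or t < best[0]):
            best = (t, s)
    return best

def trace(scene: List[Sphere], light: Vec, origin: Vec, d: Vec,
          depth: int = 0, max_depth: int = 3) -> float:
    """Brightness seen along one ray, following mirror bounces up to max_depth."""
    hit = closest(scene, origin, d)
    if hit is None:
        return 0.1                        # ray escaped the scene: dim background
    t, s = hit
    p = add(origin, scale(d, t))
    n = unit(sub(p, s.center))
    offset = sub(light, p)
    dist = math.sqrt(dot(offset, offset))
    to_light = scale(offset, 1.0 / dist)
    # Shadow ray: direct light counts only if nothing lies between p and the light.
    blocker = closest(scene, p, to_light)
    visible = blocker is None or blocker[0] > dist
    direct = s.albedo * max(dot(n, to_light), 0.0) if visible else 0.0
    # Reflection ray: recurse in the mirror direction until the depth limit.
    if s.reflectivity > 0 and depth < max_depth:
        r = sub(d, scale(n, 2.0 * dot(d, n)))
        bounce = trace(scene, light, p, r, depth + 1, max_depth)
        return (1.0 - s.reflectivity) * direct + s.reflectivity * bounce
    return direct

scene = [Sphere((0.0, -1001.0, -5.0), 1000.0, 0.8, 0.0),  # huge sphere acting as a floor
         Sphere((0.0, 0.0, -5.0), 1.0, 0.5, 0.5)]         # half-mirrored ball
light = (5.0, 5.0, 0.0)
eye = (0.0, 0.0, 0.0)
print("ball:", trace(scene, light, eye, (0.0, 0.0, -1.0)))
print("sky:", trace(scene, light, eye, (0.0, 1.0, 0.0)))
print("floor in the ball's shadow:", trace(scene, light, eye, unit((-1.0, -1.0, -6.0))))
```

The third call aims at a patch of floor directly behind the ball as seen from the light, so its shadow ray is blocked and the result is zero.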

Path Tracing: Ray Tracing’s Evolution

Path tracing extends basic ray tracing concepts to achieve even greater physical accuracy. Rather than following deterministic reflection and refraction rules, path tracing stochastically samples possible light paths, gradually building up accurate lighting solutions through statistical averaging.

The technique excels at indirect illumination effects where light bounces multiple times between surfaces before reaching the camera. A room illuminated solely by light bouncing off a red wall will take on a subtle red tint—an effect path tracing captures naturally while rasterization requires extensive approximation.

Path tracing's main challenge is noise. Since the method samples light paths randomly, initial results appear grainy and speckled. Monte Carlo noise falls only with the square root of the sample count, so halving it requires four times as many paths, and clean results can require hundreds to thousands of samples per pixel. This computational intensity has long made full path tracing impractical for real-time applications, though hardware acceleration and denoising are steadily pushing those boundaries.
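How noise shrinks with sample count can be checked without a full renderer. A minimal sketch, assuming a single shading point under a uniformly bright sky (where the exact irradiance is π times the sky radiance), estimates the lighting from random directions and measures how widely repeated estimates scatter. Each 16-fold increase in samples should shrink the scatter by roughly a factor of four.

```python
import math
import random
import statistics

def estimate_irradiance(n_samples: int, rng: random.Random, sky_radiance: float = 1.0) -> float:
    """Monte Carlo estimate of irradiance at a point under a uniform sky.
    Directions are drawn uniformly over the hemisphere (pdf = 1 / (2*pi)), for which
    cos(theta) is uniformly distributed on [0, 1). The exact answer is pi * sky_radiance."""
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n_samples):
        cos_theta = rng.random()
        total += sky_radiance * cos_theta / pdf
    return total / n_samples

rng = random.Random(1)
spread = {}
for n in (16, 256, 4096):
    runs = [estimate_irradiance(n, rng) for _ in range(200)]
    spread[n] = statistics.stdev(runs)
    print(f"N={n:5d}  mean={statistics.mean(runs):.4f}  std dev={spread[n]:.4f}")
print(f"exact: {math.pi:.4f}")
```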

Real-Time Ray Tracing: Bridging the Speed Gap

Real-time ray tracing has brought effects once reserved for offline rendering into games and other interactive applications. Modern graphics cards from NVIDIA and AMD incorporate dedicated ray tracing hardware that accelerates the ray-box and ray-triangle intersection tests performed while traversing bounding volume hierarchies, the most computationally expensive part of ray tracing.

These hardware accelerators enable hybrid rendering approaches that combine rasterization's speed with selective ray tracing for specific effects. A game might rasterize geometry but use ray tracing only for reflections, shadows, and ambient occlusion. This selective application delivers the most noticeable benefits of ray tracing while maintaining playable frame rates.

NVIDIA's DLSS technology uses AI to upscale lower-resolution images in real time, providing higher quality visuals without the full performance cost of rendering at the output resolution. By rendering at a lower internal resolution and then upscaling, ray-traced games achieve acceptable performance on consumer hardware.
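The savings come from pixel counts. Assuming ray and shading cost scale roughly with the number of pixels rendered, and ignoring the upscaler's own cost, the sketch below computes what fraction of a 4K frame's pixels are actually rendered at two example internal resolutions. These are illustrations, not the specific settings DLSS uses.

```python
def shaded_fraction(render_res: tuple, output_res: tuple) -> float:
    """Fraction of output pixels whose rays and shading are actually computed
    when rendering at render_res and upscaling to output_res."""
    (rw, rh), (ow, oh) = render_res, output_res
    return (rw * rh) / (ow * oh)

for name, res in (("1080p", (1920, 1080)), ("1440p", (2560, 1440))):
    print(f"{name} -> 4K: {shaded_fraction(res, (3840, 2160)):.1%} of output pixels shaded")
```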

Ray Tracing in Production

For non-interactive content where rendering time is less critical, ray tracing and path tracing dominate. Feature films, high-end commercials, architectural visualizations, and product renderings typically employ these methods to achieve uncompromising visual quality.

Production renderers like Arnold, V-Ray, Corona, and RenderMan use sophisticated path tracing implementations augmented with numerous optimization techniques. Importance sampling draws random directions in proportion to their expected contribution, for example toward bright lights or along a material's reflection lobe, so fewer samples are wasted on directions that add little light. Adaptive sampling allocates more rays to noisy or complex areas while using fewer samples in simple regions. Denoising algorithms clean up remaining noise, allowing acceptable results with fewer samples than pure path tracing would require.
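The benefit of importance sampling can be seen with a single integral. This sketch assumes a hypothetical sky whose brightness falls off as cos²θ away from the zenith and estimates the light arriving at an upward-facing point in two ways: uniform hemisphere sampling, and cosine-weighted sampling that places more samples where the cosine term in the lighting integral is large. Both estimators are unbiased; the second should show noticeably less scatter for the same sample count.

```python
import math
import random
import statistics

def sky(cos_theta: float) -> float:
    """Hypothetical sky radiance, brightest at the zenith: L = cos^2(theta)."""
    return cos_theta ** 2

def uniform_estimate(n: int, rng: random.Random) -> float:
    """Uniform hemisphere sampling: cos(theta) ~ U[0, 1), pdf = 1 / (2*pi)."""
    total = 0.0
    for _ in range(n):
        c = rng.random()
        total += sky(c) * c * (2.0 * math.pi)     # L * cos(theta) / pdf
    return total / n

def cosine_estimate(n: int, rng: random.Random) -> float:
    """Cosine-weighted sampling: cos(theta) = sqrt(u), pdf = cos(theta) / pi.
    More samples land near the zenith, where the cosine term makes the integrand large."""
    total = 0.0
    for _ in range(n):
        c = math.sqrt(rng.random())
        total += math.pi * sky(c)                 # L * cos(theta) / pdf simplifies to pi * L
    return total / n

rng = random.Random(7)
exact = math.pi / 2                               # integral of cos^3(theta) over the hemisphere
results = {}
for name, estimator in (("uniform", uniform_estimate), ("cosine-weighted", cosine_estimate)):
    runs = [estimator(64, rng) for _ in range(500)]
    results[name] = (statistics.mean(runs), statistics.stdev(runs))
    print(f"{name:15s} mean={results[name][0]:.4f}  std dev={results[name][1]:.4f}  exact={exact:.4f}")
```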

Radiosity: Global Illumination Through Energy Transfer

Radiosity takes a fundamentally different approach to lighting simulation, modeling light as energy transferred between surfaces rather than traced along specific rays. This technique particularly excels at diffuse interreflection—the way light bounces between matte surfaces, gradually distributing illumination throughout environments.

The Radiosity Method

Radiosity models inter-reflection, the repeated reflection of light between surfaces. The algorithm subdivides scene surfaces into small patches and computes form factors describing what fraction of the energy leaving each patch arrives at every other patch. Each patch's outgoing energy is then its own emission plus the reflected share of the energy it receives, which yields a system of linear equations describing energy balance across all patches.
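The energy balance is usually written B_i = E_i + ρ_i Σ_j F_ij B_j, where B_i is the radiosity leaving patch i, E_i its emission, ρ_i its reflectance, and F_ij the form factor from patch i to patch j. A minimal sketch solves a three-patch version by Gauss-Seidel iteration, where each sweep effectively adds another bounce of light. The form factors are made-up illustrative values; computing them from real geometry is the expensive part of a production radiosity solver.

```python
from typing import List

def solve_radiosity(emission: List[float], reflectance: List[float],
                    form_factors: List[List[float]], iterations: int = 50) -> List[float]:
    """Gauss-Seidel iteration on B_i = E_i + rho_i * sum_j F_ij * B_j.
    F_ij is the fraction of energy leaving patch i that arrives at patch j."""
    n = len(emission)
    b = list(emission)                                  # start with emitted light only
    for _ in range(iterations):                         # each sweep adds further bounces
        for i in range(n):
            gathered = sum(form_factors[i][j] * b[j] for j in range(n) if j != i)
            b[i] = emission[i] + reflectance[i] * gathered
    return b

# Three patches: a lamp, a white ceiling, and a grey wall (single colour channel).
# These form factors are made-up illustrative values, not computed from real geometry.
E = [1.0, 0.0, 0.0]
rho = [0.0, 0.8, 0.5]
F = [[0.0, 0.5, 0.3],
     [0.4, 0.0, 0.4],
     [0.3, 0.4, 0.0]]
B = solve_radiosity(E, rho, F)
print([round(v, 4) for v in B])
```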

Solving these equations yields lighting information independent of viewpoint. Once calculated, radiosity solutions can be viewed from any angle without recalculation—a significant advantage for interactive applications where camera movement is common.

The technique naturally handles soft shadows and color bleeding, effects that classical ray tracing must work harder to achieve. A white ceiling lit by a lamp will illuminate a room with soft, diffuse light that gradually fades with distance. Light bouncing off a red wall will tint nearby surfaces red, exactly the kind of subtle, realistic lighting that makes spaces feel authentic.

Radiosity Limitations and Modern Usage

Radiosity’s view-independence comes with limitations. The method handles only diffuse surfaces well; glossy or mirror-like materials don’t fit the energy-transfer model. Specular highlights, sharp reflections, and refraction effects require different approaches.

Contemporary rendering often combines radiosity principles with other techniques. Photon mapping uses ray tracing to deposit energy packets throughout scenes and then estimates illumination from their local density, producing a view-independent record of light much as radiosity does. Irradiance caching computes indirect diffuse illumination at sparse points and interpolates results across surfaces, echoing radiosity's smooth per-patch solutions.

While pure radiosity implementations are less common today, the technique’s insights about light transport continue influencing modern global illumination algorithms that seek to balance accuracy, speed, and versatility.

Photon Mapping: Hybrid Approach to Complex Lighting

Photon mapping bridges ray tracing and radiosity concepts, offering a versatile solution for challenging lighting scenarios. This two-pass technique first simulates light emission from sources, then renders final images using this precomputed lighting information.

How Photon Mapping Operates

During the first pass, the renderer emits photons from light sources, following them as they bounce through the scene. Each time a photon hits a surface, its position and energy are recorded in a spatial data structure called a photon map. Millions of photons gradually build up a detailed representation of light distribution throughout the environment.

The second pass renders final images from the camera perspective. When rays hit surfaces, the renderer queries nearby photons in the photon map to estimate indirect illumination at intersection points. This two-stage process efficiently handles complex lighting phenomena that challenge other methods.
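The gather step is a density estimate: the power of the k photons nearest a shading point, divided by the area of the disc that contains them. The Python sketch below assumes a flat 10 x 10 floor, fakes the first pass by scattering photons directly (half uniformly, half into a small disc standing in for a caustic) rather than tracing them off real glass, and uses a brute-force nearest-neighbour search where a real renderer would use a kd-tree. All names and numbers are hypothetical.

```python
import heapq
import math
import random
from typing import List, Tuple

Photon = Tuple[float, float, float]   # (x, y, power in watts) on a flat floor

def emit_photons(n: int, total_power: float, rng: random.Random) -> List[Photon]:
    """Stand-in for pass 1: deposit n equal-power photons on a 10 x 10 floor.
    Even-numbered photons land uniformly; odd ones fall in a disc of radius 0.5
    around (5, 5), mimicking light focused by a lens."""
    photons = []
    for i in range(n):
        if i % 2 == 0:
            x, y = rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0)
        else:
            r, angle = 0.5 * math.sqrt(rng.random()), rng.uniform(0.0, 2.0 * math.pi)
            x, y = 5.0 + r * math.cos(angle), 5.0 + r * math.sin(angle)
        photons.append((x, y, total_power / n))
    return photons

def flux_density(photons: List[Photon], x: float, y: float, k: int = 50) -> float:
    """Pass 2 gather: power of the k nearest photons divided by the area pi * r^2 of the
    smallest disc around (x, y) that contains them (W/m^2 if positions are in metres)."""
    def dist2(p: Photon) -> float:
        return (p[0] - x) ** 2 + (p[1] - y) ** 2
    nearest = heapq.nsmallest(k, photons, key=dist2)   # sorted nearest first
    return sum(p[2] for p in nearest) / (math.pi * dist2(nearest[-1]))

rng = random.Random(3)
photons = emit_photons(20_000, total_power=1000.0, rng=rng)
ordinary = flux_density(photons, 1.0, 1.0)
caustic = flux_density(photons, 5.0, 5.0)
print(f"ordinary floor: {ordinary:.1f} W/m^2   caustic: {caustic:.1f} W/m^2")
```

For reference, the true flux densities in this setup are 5 W/m² on the open floor and about 642 W/m² inside the focused disc; the estimates scatter around those values, and raising k trades that noise for blur.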

Photon mapping excels at caustics—focused light patterns created by reflective or refractive surfaces. The dancing light patterns at the bottom of swimming pools, bright spots where glass lenses focus sunlight, and intricate reflections from jewelry all emerge naturally from photon mapping without special-case handling.

Practical Applications

Photon mapping finds favor in scenarios requiring specific effects difficult to achieve otherwise. Product visualization of transparent objects like glassware or gemstones benefits from accurate caustic rendering. Architectural scenes with complex natural lighting involving skylights, translucent materials, and reflective surfaces leverage photon mapping’s versatility.

The technique’s main challenge is achieving smooth, noise-free results. Since photon distributions are inherently discrete, directly using photon map data creates blotchy, irregular lighting. Various filtering and density estimation techniques smooth these irregularities, trading some accuracy for visual appeal.

Cloud Rendering: Democratizing Computational Power

The rise of cloud computing has significantly impacted 3D rendering, offering virtually unlimited computing power and storage. Cloud rendering services represent a paradigm shift in how rendering resources are accessed and utilized, removing hardware constraints that traditionally limited what individual artists and small studios could accomplish.

Cloud Rendering Architecture

Cloud rendering services provide access to render farms—massive arrays of computers dedicated to rendering tasks. Rather than purchasing expensive workstations with high-end graphics cards, users upload their scenes to cloud platforms that distribute rendering across hundreds or thousands of machines simultaneously.

This distributed approach dramatically accelerates rendering times. A complex animation that would take a week on a single workstation might complete in hours when distributed across a cloud farm. Projects requiring rapid turnaround or handling unexpected rush work become feasible without maintaining expensive idle infrastructure.
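The scaling claim can be checked with simple arithmetic. The sketch below assumes frame-level parallelism (each node renders whole frames), identical frame times, a fixed upload overhead, and no queueing; the job size and timings are hypothetical.

```python
import math

def render_wall_clock_hours(frames: int, hours_per_frame: float, nodes: int,
                            upload_hours: float = 0.0) -> float:
    """Rough wall-clock time when whole frames are handed out to identical render nodes.
    Frames finish in 'waves' of up to `nodes` frames, each lasting one frame time."""
    waves = math.ceil(frames / nodes)
    return upload_hours + waves * hours_per_frame

def node_hours(frames: int, hours_per_frame: float) -> float:
    """Total compute consumed, which does not shrink with more nodes (ignoring overheads)."""
    return frames * hours_per_frame

# Hypothetical job: 240 frames at 42 minutes each is one week (168 h) on a single machine.
frames, per_frame = 240, 0.7
for nodes in (1, 10, 100, 240):
    t = render_wall_clock_hours(frames, per_frame, nodes, upload_hours=1.0 if nodes > 1 else 0.0)
    print(f"{nodes:4d} nodes: ~{t:6.1f} h wall clock, {node_hours(frames, per_frame):.0f} node-hours")
```

In this simplified model, wall-clock time falls from a week to about three hours while the node-hours consumed stay constant: spreading the work buys turnaround, not less total compute.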

Cloud rendering services enable artists and studios to render complex scenes and animations without the need for expensive local hardware, streamlining workflows and reducing costs. The pay-as-you-go pricing model converts fixed capital expenditures into variable operational costs, improving financial flexibility.

Practical Considerations

Cloud rendering introduces new workflow considerations. Large scene files must be uploaded to cloud platforms, requiring robust internet connectivity. Asset management becomes critical when multiple team members work on projects distributed across cloud resources. Data security and intellectual property protection require careful vendor selection and appropriate agreements.

Popular cloud rendering services like AWS Thinkbox, Google Cloud, Azure, and specialized platforms like RebusFarm, RenderRocket, and Fox Renderfarm offer varying capabilities, pricing models, and software support. Evaluating these services requires considering render engine compatibility, pricing transparency, customer support quality, and performance consistency.

Artificial Intelligence in Rendering: The Emerging Revolution

Artificial intelligence is at the forefront of transforming the 3D rendering landscape, introducing capabilities that change how rendering is approached, accelerated, and refined.

AI-Accelerated Rendering

The 2024 version of OctaneRender introduced AI-accelerated rendering that uses deep learning algorithms to optimize and expedite the rendering pipeline. These intelligent systems learn patterns in rendering processes, predicting outcomes and optimizing calculations in ways traditional algorithms cannot.

AI-driven denoising can clean up noisy render outputs, producing high-quality images faster than waiting for full convergence. Rather than tracing enough samples for path tracing to converge, AI denoisers analyze partially converged images, often together with auxiliary buffers such as surface albedo and normals, and reconstruct a clean result. Renders that once required hours of computation can finish in minutes at comparable perceived quality.

Automated Asset Generation

AI algorithms can analyze reference images and automatically generate textures and materials that are highly realistic, saving time for artists and ensuring consistency in final renders. Machine learning models trained on vast libraries of materials can create new variations, suggest appropriate settings, or automatically categorize assets based on visual characteristics.

AI enables faster iterations through various designs, accelerating the product development process. Generative design powered by AI can explore thousands of design variations based on specified parameters, showing designers options they might never have considered while respecting engineering and aesthetic constraints.

Future AI Integration

Looking ahead, AI promises even deeper rendering integration. Neural rendering techniques replace traditional light transport calculations with trained neural networks that learn scene-specific rendering. These approaches can achieve real-time performance for effects that would be computationally prohibitive with conventional methods.

AI and machine learning will continue to play a pivotal role in 3D rendering, automating many aspects of the rendering process from optimizing rendering algorithms to generating textures and materials. As these technologies mature, the line between what constitutes rendering and what constitutes AI-powered synthesis may increasingly blur.

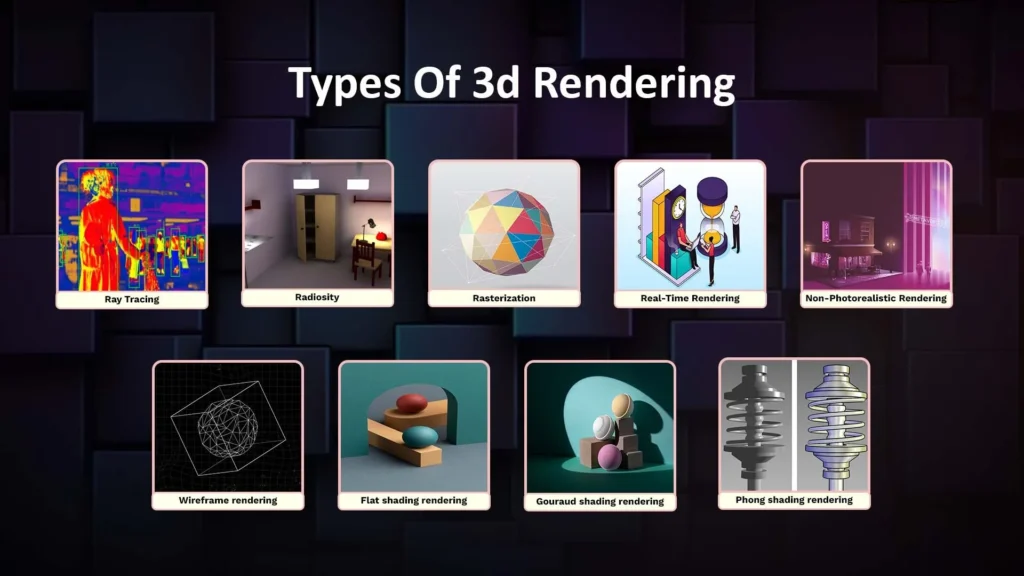

Specialized Rendering Techniques

Beyond mainstream methods, specialized rendering techniques address specific needs or achieve particular aesthetic goals.

Non-Photorealistic Rendering (NPR)

Not all rendering pursues photorealism. Non-photorealistic rendering encompasses techniques that deliberately create stylized, artistic, or abstract visuals. Toon shading creates cartoon-like appearances with discrete color bands rather than smooth gradients. Watercolor rendering simulates traditional painting media. Technical illustration rendering generates clean, diagrammatic images highlighting important features while minimizing visual clutter.
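Toon shading's discrete bands come from quantizing an ordinary lighting term. A minimal sketch, assuming Lambertian (N·L) lighting and three bands, maps smoothly varying brightness to a few flat levels; production toon shaders typically add outlines and often use a ramp texture instead of a fixed formula. The function name is hypothetical.

```python
import math
from typing import Sequence

def toon_shade(normal: Sequence[float], light_dir: Sequence[float], bands: int = 3) -> float:
    """Quantise Lambertian brightness max(N.L, 0) into `bands` flat levels.
    Unlit surfaces return 0; lit ones return 1/bands, 2/bands, ..., 1."""
    n_len = math.sqrt(sum(c * c for c in normal))
    l_len = math.sqrt(sum(c * c for c in light_dir))
    lambert = max(sum(a * b for a, b in zip(normal, light_dir)) / (n_len * l_len), 0.0)
    if lambert == 0.0:
        return 0.0
    band = min(int(lambert * bands), bands - 1)    # index of the discrete step
    return (band + 1) / bands

# Sweep the light from overhead to grazing: smooth shading falls off continuously,
# while the toon version steps between a few flat values.
for degrees in range(0, 91, 15):
    a = math.radians(degrees)
    light = (math.sin(a), 0.0, math.cos(a))
    print(f"{degrees:2d} deg  smooth={math.cos(a):.3f}  toon={toon_shade((0.0, 0.0, 1.0), light):.3f}")
```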

These techniques serve important purposes in educational materials, artistic expression, medical illustration, and user interface design where clarity or aesthetic considerations outweigh photographic accuracy.

Volume Rendering

Volume rendering visualizes three-dimensional scalar fields—data distributed throughout space rather than on surfaces. Medical imaging, scientific visualization, and special effects heavily rely on volume rendering to display phenomena like clouds, smoke, fire, medical scans, and atmospheric effects.

These techniques face unique challenges since volumes lack discrete surfaces to render. Ray marching samples data along ray paths, accumulating color and opacity to create final images. Transfer functions map data values to visual properties, allowing scientists to highlight features of interest within complex datasets.
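Ray marching with a transfer function can be sketched in a few lines. This version assumes a hypothetical spherical "blob" of density, a simple transfer function that treats low values as empty space, and front-to-back compositing with Beer-Lambert opacity per step; a real volume renderer would also light the volume and sample a 3D texture rather than an analytic function.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]

def transfer_function(value: float) -> Tuple[float, float]:
    """Hypothetical transfer function: scalar value -> (brightness, extinction per unit length).
    Values below 0.2 are treated as empty; denser material is brighter and more opaque."""
    if value < 0.2:
        return 0.0, 0.0
    return value, 4.0 * value

def ray_march(field: Callable[[float, float, float], float], origin: Vec3, direction: Vec3,
              t_max: float, step: float = 0.01) -> Tuple[float, float]:
    """Front-to-back compositing along one ray; returns (brightness, opacity)."""
    color, alpha, t = 0.0, 0.0, 0.0
    while t < t_max and alpha < 0.99:          # early exit once nearly opaque
        x, y, z = (o + t * d for o, d in zip(origin, direction))
        c, sigma = transfer_function(field(x, y, z))
        a = 1.0 - math.exp(-sigma * step)      # opacity of this segment (Beer-Lambert)
        color += (1.0 - alpha) * a * c         # light from this sample, dimmed by what is in front
        alpha += (1.0 - alpha) * a
        t += step
    return color, alpha

def blob(x: float, y: float, z: float) -> float:
    """Example scalar field: 1 at the origin, falling linearly to 0 at radius 1."""
    return max(0.0, 1.0 - math.sqrt(x * x + y * y + z * z))

through_centre = ray_march(blob, (0.0, 0.0, -2.0), (0.0, 0.0, 1.0), t_max=4.0)
grazing = ray_march(blob, (0.0, 0.5, -2.0), (0.0, 0.0, 1.0), t_max=4.0)
missing = ray_march(blob, (0.0, 1.5, -2.0), (0.0, 0.0, 1.0), t_max=4.0)
print(through_centre, grazing, missing)
```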

Real-Time Global Illumination Techniques

Modern game engines employ sophisticated approximations to achieve global illumination effects at interactive frame rates. Techniques like voxel cone tracing, light propagation volumes, and screen-space global illumination provide reasonable approximations of indirect lighting without the computational cost of full path tracing.

These methods represent engineering compromises between accuracy and performance, carefully designed to provide visually plausible results that enhance immersion without crippling frame rates. As hardware capabilities advance, these approximations steadily improve or are replaced by more accurate approaches.

Choosing the Right Rendering Method

Selecting appropriate rendering techniques requires careful consideration of project requirements, constraints, and goals.

Application Requirements

Interactive applications demand real-time methods. Video games, virtual reality experiences, augmented reality applications, and interactive configurators need immediate visual feedback, making rasterization and related real-time techniques the only viable options. Recent hardware advances enable selective ray tracing for specific effects, but core rendering must maintain sufficient frame rates for responsive interaction.

Offline applications afford flexibility to prioritize quality. Architectural visualizations, product renderings, film visual effects, and high-end advertising can employ computationally intensive techniques like path tracing that achieve maximum realism regardless of rendering time.

Quality Expectations

Photorealism requires physics-based rendering approaches. Ray tracing, path tracing, or hybrid methods incorporating radiosity and photon mapping deliver the light interaction accuracy necessary for convincing photographic quality. These techniques properly handle reflections, refractions, caustics, indirect illumination, and subtle material properties that simpler methods approximate inadequately.

Stylized or non-photorealistic aesthetics may achieve better results with simpler, faster methods supplemented by appropriate artistic techniques. Cartoon-style animation, technical illustration, or abstract visualization often benefits more from careful art direction than from physical accuracy.

Budget and Timeline Constraints

Cloud rendering democratizes access to computational power, but costs accumulate. Projects with tight budgets may need to limit rendering complexity, optimize scenes aggressively, or accept longer local rendering times rather than paying for cloud resources.

Timeline constraints often force compromises between desired quality and achievable results within deadlines. Understanding rendering method capabilities and limitations enables realistic planning and appropriate technique selection.

Hardware Considerations

Available hardware fundamentally constrains feasible approaches. Modern GPUs with ray tracing cores open possibilities unavailable on older hardware. Large memory capacity enables higher resolution textures and more complex scenes. Fast storage systems reduce asset loading times in render farms.

Mobile and embedded platforms impose severe constraints requiring highly optimized real-time rendering with aggressive simplification. Web-based applications face additional limitations from browser capabilities and bandwidth constraints.

Industry-Specific Rendering Considerations

Different industries emphasize different rendering priorities and face unique challenges.

Architectural Visualization

Architectural rendering demands photorealism, accurate material representation, and convincing lighting. Path tracing with carefully modeled materials typically delivers best results. Attention to physical accuracy—correct measurements, realistic material properties, accurate environmental lighting—proves critical since viewers intuitively recognize when architectural spaces feel wrong.

Interactive architectural visualization increasingly employs real-time engines with ray tracing acceleration, allowing clients to explore spaces freely while maintaining impressive visual quality. This interactivity enables design refinement before construction begins, providing immense value despite technical challenges.

Product Visualization and E-Commerce

Product rendering for marketing and e-commerce prioritizes material accuracy and presentation flexibility. Customers expect product images to accurately represent what they’ll receive, making physically based materials essential. The ability to quickly generate views from multiple angles, with different material options, in various contexts provides competitive advantage.

Real-time configurators that let customers customize products and see changes instantly leverage GPU rendering to enable fluid interaction while maintaining quality sufficient for purchase decisions.

Entertainment and Gaming

Entertainment rendering divides between real-time games and offline film production. Games optimize relentlessly for performance, employing every trick to achieve target frame rates while maximizing visual impact. Film production pursues uncompromising quality, with render times per frame measured in hours when necessary.

Both domains push technical boundaries, games through real-time innovation and film through computational brute force achieving previously impossible effects. Techniques developed in each domain gradually influence the other as hardware capabilities evolve.

Medical and Scientific Visualization

Medical and scientific rendering prioritizes accuracy and clarity over aesthetic considerations. Volume rendering reveals internal structures from CT and MRI scans. Technical illustration rendering highlights important features while minimizing distracting detail. Animation helps explain complex biological processes or mechanical systems.

These applications require specialized tools and techniques developed specifically for their domains, often prioritizing precision and interpretability over photographic realism.

Rendering Optimization Strategies

Regardless of chosen rendering methods, optimization dramatically impacts productivity and project feasibility.

Scene Optimization

Efficient geometry management prevents unnecessary computational overhead. Use instancing for repeated objects, employ level-of-detail systems that simplify distant geometry, eliminate unseen polygons through occlusion culling, and optimize polygon counts while maintaining visual quality.
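Level-of-detail selection can be as simple as choosing a mesh by distance. The sketch below assumes distance thresholds and per-level triangle counts for one instanced asset, all hypothetical, and compares the triangle budget with and without LOD; engines commonly use projected screen size rather than raw distance.

```python
def select_lod(distance: float, thresholds=(10.0, 30.0, 80.0)) -> int:
    """Pick a level of detail: 0 is the full mesh, higher indices are simpler meshes.
    The distance thresholds are hypothetical and would be tuned per asset."""
    for level, limit in enumerate(thresholds):
        if distance < limit:
            return level
    return len(thresholds)          # beyond the last threshold: coarsest version

# Hypothetical triangle counts for LOD 0..3 of one tree asset, instanced 1,000 times.
TRIANGLES = (50_000, 12_000, 3_000, 500)
distances = [5.0 + 0.2 * i for i in range(1000)]   # instances spread from 5 to ~205 units away
full = len(distances) * TRIANGLES[0]
with_lod = sum(TRIANGLES[select_lod(d)] for d in distances)
print(f"without LOD: {full:,} triangles; with LOD: {with_lod:,} triangles")
```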

Texture optimization balances quality and memory usage. Choosing an appropriate resolution avoids both blurriness and memory wasted on detail that never appears on screen. Texture atlasing combines multiple textures into single images, reducing texture switches and draw calls and thereby improving rendering efficiency.
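Texture resolution has a direct memory cost. The sketch below assumes uncompressed RGBA8 textures (4 bytes per texel) with an optional full mip chain; GPU block-compressed formats reduce these figures substantially. Each halving of resolution cuts memory to a quarter.

```python
def texture_bytes(width: int, height: int, bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """Memory for an uncompressed texture (RGBA8 = 4 bytes per texel by default).
    A full mip chain halves each dimension down to 1 x 1, adding about one third."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            return total
        w, h = max(w // 2, 1), max(h // 2, 1)

MiB = 1024 * 1024
for size in (4096, 2048, 1024):
    print(f"{size} x {size}: {texture_bytes(size, size) / MiB:.1f} MiB with mipmaps")
```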

Lighting and Material Optimization

Lighting complexity directly impacts rendering time. Minimize light source count while maintaining desired illumination quality. Use global illumination techniques appropriate to your rendering method and quality requirements. Pre-bake static lighting when possible, reserving dynamic lighting for elements that actually change.

Material simplification without visible quality loss accelerates rendering. Eliminate unnecessary shader complexity, optimize texture sample counts, and use appropriate simplifications for distant or less important surfaces.

Render Settings Balance

Rendering settings offer numerous tradeoffs between quality and speed. Sample counts in path tracing, ray bounce limits, resolution settings, anti-aliasing quality, and shadow quality all impact both visual results and render times. Understanding these tradeoffs enables informed decisions that optimize the quality-to-time ratio.

Workflow Efficiency

Technical optimization extends beyond individual renders. Efficient workflows minimize wasted effort through iterative preview rendering at lower quality, progressive refinement focusing detail where needed, batch processing of similar renders, and asset reuse across projects.

The Future of 3D Rendering

Rendering technology continues evolving rapidly, with several clear trends shaping the industry’s future.

Hardware Acceleration Expansion

Dedicated rendering hardware continues advancing. Ray tracing cores are becoming standard in consumer graphics cards. Specialized AI accelerators enable neural network integration. Memory bandwidth and capacity improvements support higher resolutions and more complex scenes.

This hardware evolution enables techniques previously relegated to offline rendering to achieve real-time performance, gradually closing the gap between interactive and production-quality rendering.

AI Integration Deepening

Over the next several years, AI and machine learning are expected to automate more of the rendering process. Beyond current denoising and upscaling applications, AI will increasingly participate in light transport calculation, material generation, and scene optimization.

Neural rendering approaches may eventually compete with or complement traditional physically based methods, learning scene-specific rendering solutions that achieve comparable quality at radically improved speeds.

Real-Time Ray Tracing Maturation

Real-time ray tracing capabilities will continue improving as hardware gets faster and algorithms become more efficient. Hybrid approaches that combine rasterization with selective ray tracing will gradually give way to increasingly comprehensive ray-traced pipelines as performance permits.

As hardware and software technologies advance, we can expect even more realistic and immersive 3D rendered experiences. The distinction between real-time and offline rendering quality will progressively narrow.

Virtual and Augmented Reality Impact

Augmented and virtual reality rendering are reshaping architectural design presentation, allowing architects, developers, contractors, and homeowners to visualize designs and interact with them in a virtual environment. These immersive technologies demand specialized rendering approaches that maintain quality while meeting the stringent frame-rate and latency requirements of comfortable viewing.

Foveated rendering that concentrates detail where users look, innovative reprojection techniques, and specialized optimizations for head-mounted displays represent just the beginning of VR/AR-specific rendering innovation.
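As a rough illustration of foveated rendering, the Python sketch below assigns each screen tile a coarser shading rate the farther it lies from the gaze point, similar in spirit to the 1x1, 2x2, and 4x4 rates exposed by variable-rate shading hardware. The eccentricity bands, the linear pixels-per-degree conversion, and all function names are assumptions for illustration, not values from any headset SDK.

```python
import math

# Hypothetical eccentricity bands (degrees from the gaze point) and the shading
# rate used inside each: 1 = shade every pixel, 2 = one shade per 2x2 block,
# 4 = one shade per 4x4 block.
FOVEATION_BANDS = [(5.0, 1), (15.0, 2), (math.inf, 4)]


def shading_rate(tile_center, gaze, pixels_per_degree):
    """Coarser shading the farther a tile sits from where the user is looking.

    Assumes a simple linear mapping: eccentricity ~ pixel distance / pixels-per-degree.
    """
    dist_px = math.hypot(tile_center[0] - gaze[0], tile_center[1] - gaze[1])
    eccentricity_deg = dist_px / pixels_per_degree
    for max_deg, rate in FOVEATION_BANDS:
        if eccentricity_deg < max_deg:
            return rate
    return FOVEATION_BANDS[-1][1]


def build_rate_map(width, height, tile, gaze, pixels_per_degree):
    """One shading rate per screen tile, rebuilt whenever the eye tracker updates."""
    cols = -(-width // tile)   # ceiling division so partial edge tiles are covered
    rows = -(-height // tile)
    return [[shading_rate((c * tile + tile / 2, r * tile + tile / 2),
                          gaze, pixels_per_degree)
             for c in range(cols)]
            for r in range(rows)]


rate_map = build_rate_map(1920, 1080, 16, gaze=(960, 540), pixels_per_degree=20.0)
```

Only the small region around the gaze point is shaded at full rate; the periphery, where visual acuity is low, is shaded far more cheaply.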

Sustainability Considerations

Rendering trends in 2024 focused on reducing the environmental footprint of rendering processes, with energy-efficient algorithms and hardware adopted to minimize energy consumption. As rendering's computational demands grow, power consumption and environmental impact receive increasing attention.

Efficient algorithms, optimized workflows, and render farms powered by renewable energy address sustainability concerns while maintaining creative capabilities. This balance between computational power and environmental responsibility will increasingly influence rendering technology development.

Conclusion: Mastering the Rendering Landscape

Understanding 3D rendering methods empowers better decisions across the entire visualization pipeline. Whether you are evaluating visualization services, planning project workflows, or expanding technical expertise, knowing the available rendering techniques, along with their strengths, limitations, and appropriate applications, proves invaluable.

The rendering landscape offers no universal best method. Rasterization’s real-time performance, ray tracing’s physical accuracy, hybrid approaches balancing speed and quality, and emerging AI-enhanced techniques each serve distinct purposes. Project requirements, quality expectations, timeline constraints, and budget realities guide appropriate technique selection.

As rendering technology continues to evolve, staying aware of emerging capabilities and industry trends lets you adopt new techniques as they become practical. The gap between what is possible and what is achievable at reasonable cost and speed continues to narrow, expanding creative possibilities across all industries that rely on visual communication.

For businesses seeking professional rendering services, understanding these methods enables informed discussions with visualization partners, realistic expectation setting, and appropriate specification of deliverable requirements. For studios and artists, technical mastery across multiple rendering approaches provides versatility to tackle diverse projects efficiently.

The future of rendering promises continued democratization through cloud computing, quality improvements through AI integration, and expanded real-time capabilities through hardware acceleration. These advances will enable more creators to achieve higher quality results more efficiently, ultimately enriching visual communication across every domain that rendering touches.

Ready to leverage cutting-edge rendering technology for your next project? Chasing Illusions Studio combines 15 years of rendering expertise with a team of 80+ skilled animators proficient across all major rendering methods. From real-time configurators to photorealistic architectural visualizations, we deliver exceptional results optimized for your specific needs and budget. Contact us today to discuss how advanced rendering techniques can transform your vision into compelling reality.
